What are the key features of Hadoop?

The top 8 features of Hadoop are:

- Cost Effective System

- Large Cluster of Nodes

- Parallel Processing

- Distributed Data

- Automatic Failover Management

- Data Locality Optimization

- Heterogeneous Cluster

- Scalability

1) Cost Effective System

The Hadoop framework is cost effective: it does not require any expensive or specialized hardware. Instead, it can run on ordinary, off-the-shelf machines, which are referred to as commodity hardware.

2) Large Cluster of Nodes

It supports a large cluster of nodes. A Hadoop cluster can be made up of thousands of nodes. The main advantage of this feature is that it offers huge computing power and a huge storage system to its clients.

3) Parallel Processing

It supports parallel processing of data: the data can be processed simultaneously across the nodes in the cluster, which saves a lot of time.
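
The idea can be illustrated with a minimal, single-machine sketch of a word count. This is not Hadoop's API: the function names `map_chunk` and `word_count` are made up for illustration, and a thread pool stands in for the nodes of a cluster. Each worker counts the words in its own chunk, and the partial results are merged at the end.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def map_chunk(lines):
    """Map step: count the words in one chunk of the input."""
    counts = Counter()
    for line in lines:
        counts.update(line.lower().split())
    return counts


def word_count(lines, workers=4):
    """Split the input into chunks, count each chunk in parallel, then merge."""
    chunk_size = max(1, -(-len(lines) // workers))  # ceiling division
    chunks = [lines[i:i + chunk_size] for i in range(0, len(lines), chunk_size)]
    # Threads stand in for cluster nodes in this sketch.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partial_counts = list(pool.map(map_chunk, chunks))
    # Reduce step: merge the per-chunk counts into one result.
    total = Counter()
    for partial in partial_counts:
        total.update(partial)
    return total


lines = ["hadoop stores data", "hadoop processes data", "data is distributed"]
totals = word_count(lines, workers=3)
print(totals["data"], totals["hadoop"])
```

On a real cluster the chunks would live on different machines, so the work scales with the number of nodes rather than with the cores of one computer.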

4) Distributed Data

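The Hadoop framework takes care of splitting the data and distributing it across the nodes within a cluster. It also replicates the data across the cluster, so that each piece is stored on more than one node (by default, HDFS keeps three copies of each block).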
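
A rough sketch of splitting and replication is shown below. It assumes the HDFS defaults of a 128 MB block size and a replication factor of 3. The round-robin placement and the name `place_blocks` are simplifications for illustration only; real HDFS uses a rack-aware placement policy.

```python
import math

BLOCK_SIZE = 128 * 1024 * 1024  # 128 MB, the HDFS default since Hadoop 2
REPLICATION = 3                 # HDFS default replication factor


def place_blocks(file_size, nodes, block_size=BLOCK_SIZE, replication=REPLICATION):
    """Return {block_index: [node, ...]} using simple round-robin placement."""
    if replication > len(nodes):
        raise ValueError("need at least as many nodes as replicas")
    num_blocks = math.ceil(file_size / block_size)
    placement = {}
    for block in range(num_blocks):
        # Consecutive nodes, wrapping around, so replicas land on distinct nodes.
        placement[block] = [nodes[(block + r) % len(nodes)] for r in range(replication)]
    return placement


nodes = ["node1", "node2", "node3", "node4"]
layout = place_blocks(file_size=300 * 1024 * 1024, nodes=nodes)
for block, replicas in layout.items():
    print(block, replicas)
```

Here a 300 MB file becomes three blocks, and every block ends up on three different nodes.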

5) Automatic Failover Management

If a machine in the cluster fails, Hadoop shifts its work to other machines: tasks that were running on the failed node are rescheduled on healthy nodes, and the data it held is replicated again from the copies stored elsewhere in the cluster. Once this feature has been properly configured on a cluster, the admin does not need to intervene when a node fails.
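
The data side of failover can be pictured with a small sketch. It assumes that each block already has replicas on several nodes, so a lost replica can be recreated from a surviving copy on another node. The function `re_replicate` and the node names are hypothetical and are not part of Hadoop.

```python
def re_replicate(placement, failed_node, live_nodes, replication=3):
    """Drop the failed node's replicas and copy each affected block to a new node."""
    repaired = {}
    for block, replicas in placement.items():
        survivors = [n for n in replicas if n != failed_node]
        # Nodes that are still alive and do not yet hold this block.
        candidates = [n for n in live_nodes if n != failed_node and n not in survivors]
        # Top up to the target replica count.
        missing = max(0, replication - len(survivors))
        repaired[block] = survivors + candidates[:missing]
    return repaired


placement = {
    0: ["node1", "node2", "node3"],
    1: ["node2", "node3", "node4"],
}
after = re_replicate(placement, failed_node="node2", live_nodes=["node1", "node3", "node4"])
print(after)
```

After `node2` fails, both blocks are back to three replicas on the remaining nodes, with no action from the admin.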

6) Data Locality Optimization

In a traditional approach, whenever a program is executed, the data is transferred from the data center to the machine where the program runs. For instance, assume the data used by a program is located in a data center in the USA and the program that requires this data is in Singapore. Suppose the data required is about 1 PB in size. Transferring data of this size from the USA to Singapore would consume a lot of bandwidth and time. Hadoop avoids this problem by moving the code, which is only a few megabytes in size, instead of the data. It transfers the code from Singapore to the data center in the USA and executes it locally, next to the data. This saves a lot of time and bandwidth, and it is one of the most important features of Hadoop.

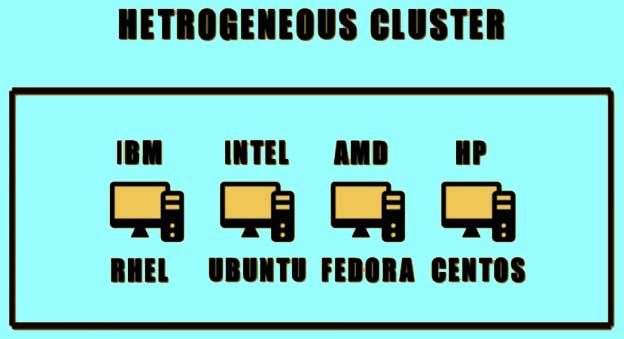
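
A back-of-the-envelope calculation shows the scale of the difference. The 10 Gbit/s link speed and the 5 MB code size below are assumptions chosen for illustration; they do not come from the example above, and the estimate ignores latency and protocol overhead.

```python
def transfer_seconds(size_bytes, link_bits_per_second):
    """Ideal transfer time, ignoring latency and protocol overhead."""
    return size_bytes * 8 / link_bits_per_second


LINK = 10 * 10**9   # assumed 10 Gbit/s link
DATA = 10**15       # 1 PB of input data
CODE = 5 * 10**6    # assumed 5 MB of program code

data_days = transfer_seconds(DATA, LINK) / 86_400
code_ms = transfer_seconds(CODE, LINK) * 1_000

print(f"moving the data: {data_days:.1f} days")
print(f"moving the code: {code_ms:.0f} ms")
```

Under these assumptions, moving the data takes about 9.3 days, while moving the code takes about 4 ms.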

7) Heterogeneous Cluster

It supports heterogeneous clusters, which is also one of the most important features offered by the Hadoop framework. A heterogeneous cluster is a cluster in which each node can come from a different vendor, and each node can run a different version and a different flavour of operating system. For example, consider a cluster made up of four nodes: the first node is an IBM machine running RHEL (Red Hat Enterprise Linux), the second is an Intel machine running Ubuntu Linux, the third is an AMD machine running Fedora Linux, and the last is an HP machine running CentOS Linux.

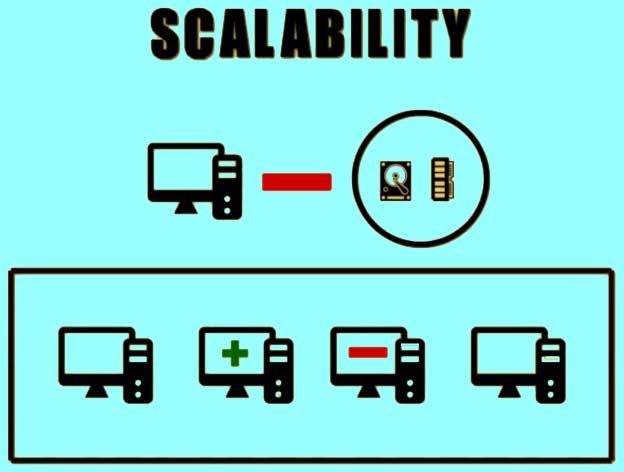

8) Scalability

Scalability refers to the ability to add nodes to, or remove nodes from, the cluster without affecting or bringing down cluster operation. Individual hardware components such as RAM or hard drives can also be added or removed.
